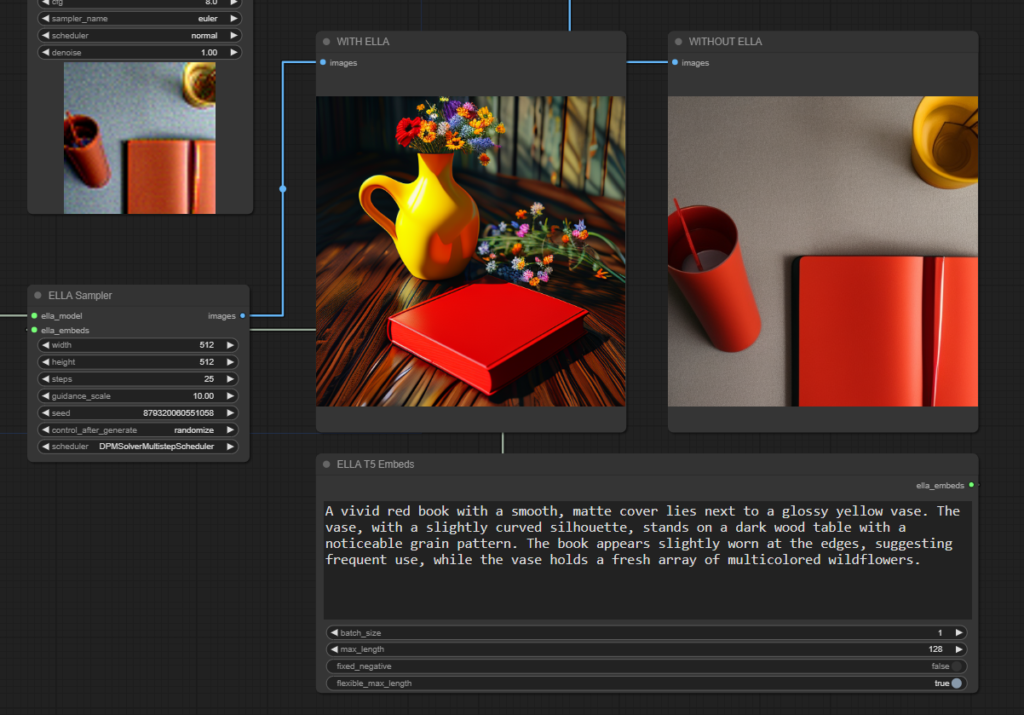

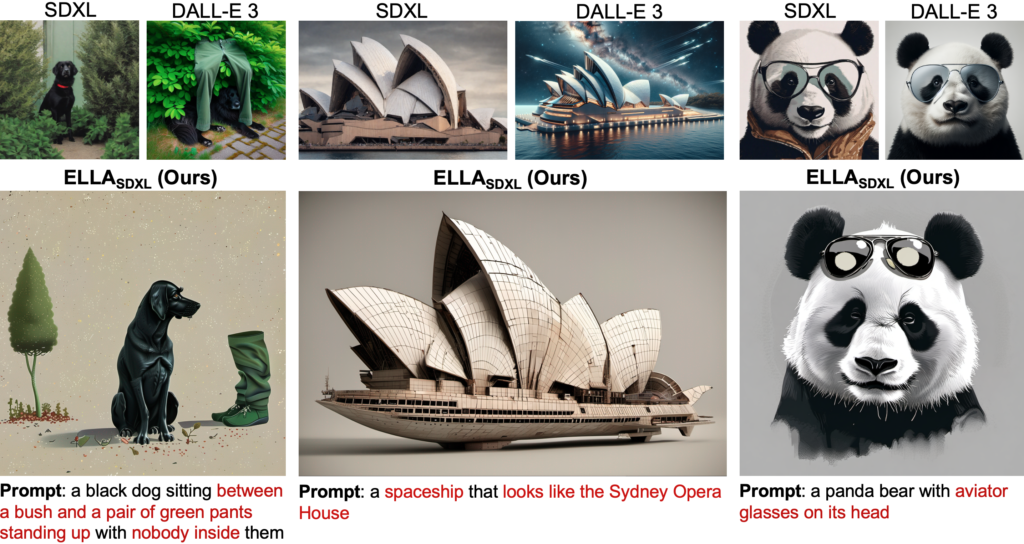

Diffusion models have demonstrated an exceptional ability to generate images from text. However, most of these models still use CLIP as their text encoder, which limits their ability to understand dense prompts: prompts that combine multiple objects, detailed attributes, and complex relationships, and that require the image to stay aligned with long text. ELLA (*Equip Diffusion Models with LLM for Enhanced Semantic Alignment*) was introduced to address this problem.

ELLA is an efficient Large Language Model (LLM) adapter that equips CLIP-based diffusion models with powerful LLMs to improve text alignment, without training either the U-Net or the LLM. To bridge the two pre-trained models, the authors explore a range of semantic alignment connector designs and propose a new module, the Timestep-Aware Semantic Connector (TSC), which dynamically extracts timestep-dependent conditions from the LLM.
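Training neither the U-Net nor the LLM works because a frozen U-Net's cross-attention layers only need condition tokens of the right feature width; they do not care where those tokens come from or how many there are. The NumPy sketch below illustrates that interface under simplifying assumptions: all tensors are random stand-ins, all sizes are hypothetical, and ELLA's TSC is replaced by a single hypothetical linear projection (`w_connector`) purely to show the data flow. It is not ELLA's implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def unet_cross_attention(image_tokens, text_tokens, w_q, w_k, w_v):
    """One frozen cross-attention layer: image tokens attend to condition tokens."""
    q = image_tokens @ w_q
    k = text_tokens @ w_k
    v = text_tokens @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
    return weights @ v

rng = np.random.default_rng(0)
D_IMG, D_TEXT, D_HEAD = 320, 768, 64   # hypothetical layer sizes
D_LLM = 2048                           # hypothetical LLM hidden size

# Frozen U-Net cross-attention weights (never updated).
w_q = rng.standard_normal((D_IMG, D_HEAD)) * 0.02
w_k = rng.standard_normal((D_TEXT, D_HEAD)) * 0.02
w_v = rng.standard_normal((D_TEXT, D_HEAD)) * 0.02
image_tokens = rng.standard_normal((256, D_IMG))

# Original conditioning: CLIP-style text embeddings (77 tokens).
clip_tokens = rng.standard_normal((77, D_TEXT))

# ELLA-style conditioning: frozen LLM features for a long prompt, mapped by a
# trainable connector. Hypothetical stand-in: one linear projection, not the TSC.
llm_features = rng.standard_normal((300, D_LLM))
w_connector = rng.standard_normal((D_LLM, D_TEXT)) * 0.02
ella_tokens = llm_features @ w_connector

out_clip = unet_cross_attention(image_tokens, clip_tokens, w_q, w_k, w_v)
out_ella = unet_cross_attention(image_tokens, ella_tokens, w_q, w_k, w_v)
print(out_clip.shape, out_ella.shape)  # (256, 64) (256, 64)
```

The same frozen weights accept a 77-token CLIP condition and a 300-token LLM-derived condition, so in this picture only the connector would need training.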

The TSC adapts semantic features to different stages of the denoising process, helping the diffusion model interpret long, complex prompts throughout sampling. ELLA can also be easily incorporated into community models and tools to improve their prompt following. To evaluate how well text-to-image models follow dense prompts, the authors also introduced the Dense Prompt Graph Benchmark (DPG-Bench), a challenging benchmark of 1K dense prompts.
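To make the TSC's timestep dependence concrete, here is a minimal single-block sketch in NumPy. It assumes a Perceiver-Resampler-style design, in which a fixed set of learnable query tokens cross-attends to the frozen LLM's token features, and it injects the diffusion timestep through an adaptive layer norm (AdaLN) whose scale and shift are predicted from a sinusoidal timestep embedding. The class name `TimestepAwareConnector`, all weight names, and all sizes are hypothetical; this illustrates the idea rather than reproducing ELLA's released code.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def timestep_embedding(t, dim):
    """Sinusoidal timestep embedding, as commonly used in diffusion U-Nets."""
    half = dim // 2
    freqs = np.exp(-np.log(10000.0) * np.arange(half) / half)
    args = t * freqs
    return np.concatenate([np.sin(args), np.cos(args)])

def ada_layer_norm(x, t_emb, w_scale, w_shift, eps=1e-6):
    """LayerNorm whose scale and shift are predicted from the timestep embedding."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    x_norm = (x - mean) / np.sqrt(var + eps)
    return x_norm * (1.0 + t_emb @ w_scale) + t_emb @ w_shift

class TimestepAwareConnector:
    """Hypothetical one-block sketch: learnable queries attend to frozen LLM
    features, with timestep-conditioned normalization of the queries."""

    def __init__(self, d_llm, d_model, n_queries, d_t=128, seed=0):
        rng = np.random.default_rng(seed)
        init = lambda shape: rng.standard_normal(shape) / np.sqrt(shape[0])
        self.d_t = d_t
        self.queries = rng.standard_normal((n_queries, d_model))
        self.w_in = init((d_llm, d_model))      # project LLM features
        self.w_q = init((d_model, d_model))
        self.w_k = init((d_model, d_model))
        self.w_v = init((d_model, d_model))
        self.w_scale = init((d_t, d_model))     # timestep -> AdaLN scale
        self.w_shift = init((d_t, d_model))     # timestep -> AdaLN shift

    def __call__(self, llm_features, t):
        t_emb = timestep_embedding(t, self.d_t)
        kv = llm_features @ self.w_in
        q_in = ada_layer_norm(self.queries, t_emb, self.w_scale, self.w_shift)
        q, k, v = q_in @ self.w_q, kv @ self.w_k, kv @ self.w_v
        attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)
        return self.queries + attn @ v  # residual update of the query tokens

rng = np.random.default_rng(1)
llm_features = rng.standard_normal((120, 256))  # stand-in for frozen LLM output
tsc = TimestepAwareConnector(d_llm=256, d_model=64, n_queries=16)
cond_early = tsc(llm_features, t=999)  # high-noise step
cond_late = tsc(llm_features, t=10)    # low-noise step
print(cond_early.shape)                # (16, 64)
```

Because the timestep changes how the queries are normalized, the same prompt yields different condition tokens at early (high-noise) and late (low-noise) steps, while the output always has a fixed number of tokens regardless of prompt length.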